ChatGPT is Not A Panacea

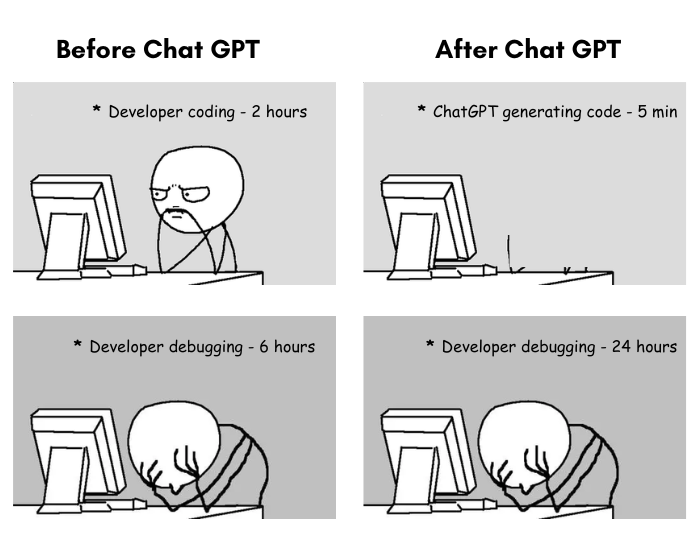

Everyone and their mom has been talking about ChatGPT and how it can be used to automate anything from your business to customer support, and even investing. While the tech does save time when used correctly, it's not the panacea that everyone from Alex Hormozi to Cody Sanchez has been making it out to be. Generative AI has limitations, and they're quite severe. Before I go any further, it's important to understand what an LLM (large language model) actually is and how it works. An LLM is basically a statistical auto-complete engine powered by neural networks. If that set off some alarm bells, it should.

LLMs, like regular auto-complete engines, are good for generating boilerplate content. But just like you wouldn't let Clippy (for those who still remember him) write your essay, you shouldn't let ChatGPT do so either, for the same reason. LLMs are basically an aggregated summary of content you see on the web. Without a human driving, your essay will end up being a generic summary of what any competent 12th grader could have written on the same topic with 30 minutes of research.
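To make the "statistical auto-complete" idea concrete, here's a toy sketch. It is not how ChatGPT is built (real LLMs use neural networks trained on vastly more text); it's a simple word-pair counter, and the names `train_bigrams` and `autocomplete` and the tiny corpus are made up for illustration. The point is only that "pick the likeliest next word" can produce fluent-looking text with no understanding behind it.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def autocomplete(follows, start, length=5):
    """Greedily append the most frequent next word, up to `length` times."""
    out = [start]
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # the model has never seen this word, so it has nothing to say
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)

# Hypothetical training text, just large enough to show the behavior.
corpus = "the cat sat on the mat the cat sat on the sofa"
model = train_bigrams(corpus)
print(autocomplete(model, "the", length=3))
```

The output reads like a sentence fragment because it copies the most common patterns in its training text, not because anything in the code knows what a cat is.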

Additionally, generative AI has a number of limitations that make it unsuitable for certain tasks, regardless of how much training is done and regardless of whether we're talking about GPT-4, 5, or even 10. I've made a video about this very topic on YouTube: https://www.youtube.com/watch?v=NFrPf5BhmGc

The three main problems with generative AI are as follows:

- Hallucinations and tendency to make up information

- Lack of actual experience as perceived by humans

- Inadequate mathematical abilities

Let's dive deeper into each one:

Hallucinations and Tendency to Make Up Information

The video above shows a concrete example of this problem. In it, I ask ChatGPT for the population of Greater Boston, which it confidently proclaims to be over 7 million (the actual population is 4.8 million). When asked to cite its source, ChatGPT states that it fetched this information from the official Census website (except the Census website never states the 7 million figure, something ChatGPT is oblivious to). When asked for the actual Census page this info came from, ChatGPT provides a valid-looking URL (https://census.gov/popest/data/metro/totals/2020/index.html) linking back to a page on census.gov.

The only problem is that the page doesn't exist, nor has it ever existed, according to the Wayback Machine. ChatGPT did not learn how to properly cite sources. It learned how to generate valid-looking URLs that appear to cite sources. Which brings me to the next point.

Lack of Actual Experience

ChatGPT doesn't actually understand the information it spits out. It doesn't understand the material; it only understands how someone who does understand the material would explain it. This is a subtle but important nuance, and the citation example above shows the implications of this.

ChatGPT doesn't actually understand citations. It only understands how someone would cite their sources, and that's exactly what it's doing when asked to cite its sources. Real-time access to the web won't fix this problem. The problem isn't that the URL is out of date; the URL never existed to begin with.
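Since a generated URL can look perfectly valid without pointing at anything, one practical guard is to check every citation yourself before trusting it. Below is a minimal sketch of that idea using Python's standard `urllib`. The function name `citation_resolves` and its `opener` parameter are my own inventions for illustration, and it assumes the server answers HEAD requests (some don't). Note what it does not do: a page that loads still has to be read by a human to confirm it actually says what was claimed.

```python
import urllib.error
import urllib.request

def citation_resolves(url, opener=urllib.request.urlopen, timeout=10):
    """Return True if the URL answers with an HTTP status below 400.

    `opener` is injectable so the check can be exercised without a network.
    """
    try:
        # Request() raises ValueError for strings that aren't URLs at all.
        request = urllib.request.Request(url, method="HEAD")
        with opener(request, timeout=timeout) as response:
            return response.status < 400
    except (OSError, ValueError):
        # HTTPError (e.g. 404), URLError, and timeouts are all OSError subclasses.
        return False
```

Calling `citation_resolves(...)` on each URL an LLM hands you is a cheap first filter, nothing more.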

This limitation is apparent when you dive into other topics as well. Ask ChatGPT, for example, how adding a certain ingredient will affect the taste of a dish. It will be able to speak about the dish and the ingredient independently, but it lacks the chef's intuition needed to understand how the ingredients interact and the chemical reactions that go on during cooking. Similarly, it may be able to tell you what exercises you need to do at the gym to get fit, but it lacks any concept of form, and without proper form you'll likely hurt yourself. ChatGPT is a good starting point when you know nothing about the topic, or need to throw together some generic boilerplate. Don't expect ChatGPT's knowledge to match that of an expert, however - because it doesn't understand the nuance that comes with expertise.

Inadequate Mathematical Abilities

Continuing on the topic of nuance, if you've ever asked ChatGPT to write "a couple paragraphs" or "5 bullet points", or to "limit response to 500 words", you've probably noticed that ChatGPT gets the general ballpark correct but doesn't actually follow instructions. Counting words and bullet points is arithmetic, and unlike regular software, LLMs are not good at math. This is why I'm wary of tools claiming to use GPT for analytics. I have yet to see any of them work as advertised. Generative AI may be good at predicting trends, but it's not good at actual forecasts. The dangerous part is that unlike previous software, generative AI has a hard time saying "I don't know". Instead, it will make up an answer that sounds reasonable. And to someone who isn't familiar with the field, this could do more harm than good.
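Regular software, by contrast, counts exactly. Here's a rough sketch of how you might verify that a response actually followed "5 bullet points" or "limit response to 500 words" rather than taking the model's word for it. The helper `check_constraints` is hypothetical, and it assumes words are whitespace-separated and bullets are lines starting with `- ` or `* `.

```python
def check_constraints(text, max_words=None, bullet_count=None):
    """Return a list of violated constraints (an empty list means all passed)."""
    problems = []

    # Word count: split on whitespace, the same way a person would count.
    words = len(text.split())
    if max_words is not None and words > max_words:
        problems.append(f"{words} words, limit was {max_words}")

    # Bullet count: lines that begin with a markdown-style bullet marker.
    bullets = sum(
        1 for line in text.splitlines()
        if line.lstrip().startswith(("- ", "* "))
    )
    if bullet_count is not None and bullets != bullet_count:
        problems.append(f"{bullets} bullets, expected {bullet_count}")

    return problems

# A made-up response that was supposed to contain 5 bullet points.
response = "- first point\n- second point\n- third point"
print(check_constraints(response, bullet_count=5))
```

A dozen lines of ordinary code get this right every time, which is exactly the kind of precision you shouldn't expect from the model itself.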

For example, ask ChatGPT about the best real estate markets to invest in for 2023, and it will spit out the top 5 markets from 2021 (from its last data snapshot). Surely if these markets were trending up in 2021, they should still be trending up in 2023 - right? Nope, all of the "top" markets it recommends (Denver, Austin, Nashville, Phoenix, Portland) are currently seeing the steepest declines. What goes up quickly can fall just as quickly, especially when interest rates skyrocket, and ChatGPT does not understand this.

ChatGPT is a great shortcut if you have no idea how to get started, or if you need to generate boilerplate, but it is just that - boilerplate. ChatGPT's work should always be a side dish to your main offering. There is no sentience within ChatGPT, and anyone who thinks otherwise doesn't know what they're talking about.